I had a pretty epic chat with Hallow’s co-founder, Alex Jones, that got me thinking about several topics. If you haven’t already, check it out here. Towards the end of the chat, I asked Alex if he thinks an advanced version of AI could become a new form of god in the near future. The way I presented the scenario, which caught Alex by surprise, was as follows:

There are many definitions of god, and one I frequently heard while growing up was that god is:

Omnipotent → All-powerful

Omniscient → All-knowing

Omnipresent → Everywhere at the same time

With this setup in mind, and considering the latest advancements in AI (with new developments emerging literally every single week), it’s not far-fetched to question whether we could soon meet all three conditions.

Omnipotent

What we’re referencing here is something close to Artificial General Intelligence (AGI): taking the most powerful models we can think of today and applying them across various cognitive abilities to replicate human-level intelligence. The reality is, I don’t think we need to get that close to AGI to start sensing some of these god-like features in certain AI applications like ChatGPT.

The timeline for AGI is uncertain, but the progress in AI research and development is undeniable. Models are getting faster, cheaper, and better by the minute.

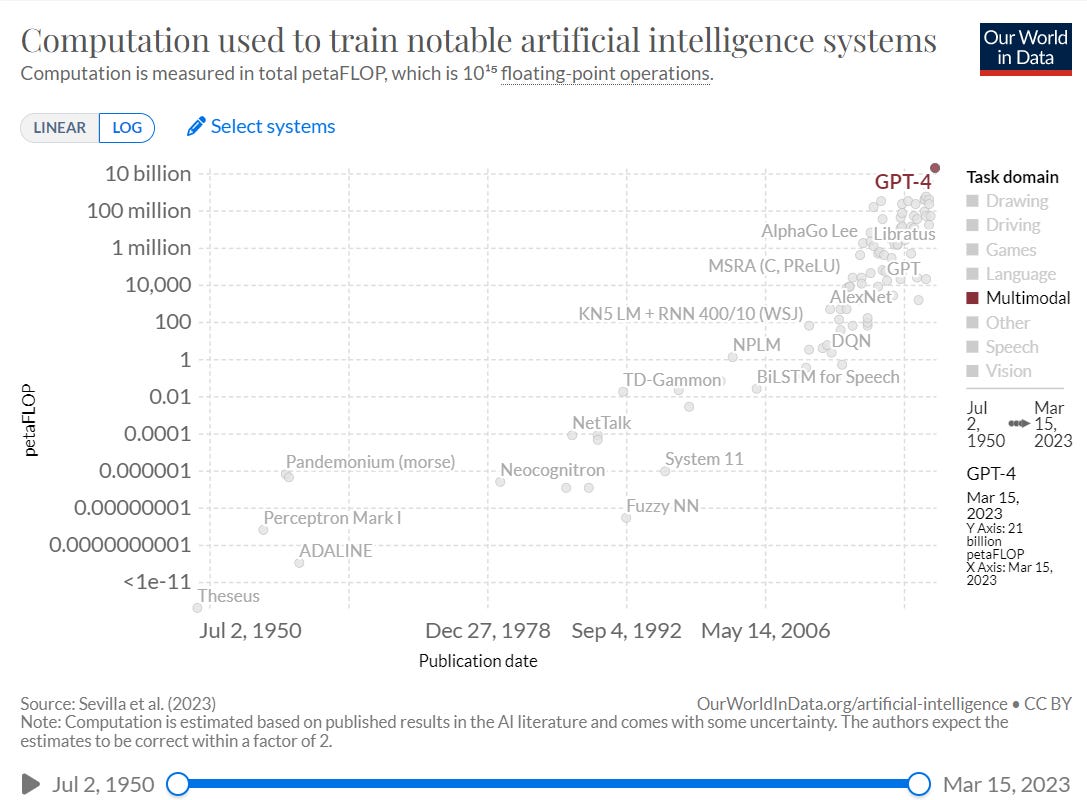

R&D for AI has experienced a remarkable surge, with the number of patents filed in 2021 skyrocketing to 30 times higher than in 2015. Training computation has also witnessed exponential growth, as shown below (mind you, this graph is on a logarithmic scale; otherwise, GPT-4 would be the only point up and to the right).

In other words, computing power growth has been staggering while the cost to train these models is simultaneously collapsing…

But beyond petaFLOPs, parameters, and the full cocktail of machine learning jargon, what’s important here is how well these AI models can actually perform. Well, GPT-4 is as powerful as it gets. I heard somewhere it might be the most complex software humanity has produced so far.

For instance, GPT-4 has already shown human-level performance in different settings, including professional benchmarks. Not only did it pass most of the dozens of human exams it was tested on (the below is just a snippet, see full paper here), but it crushed historically tough exams like the bar exam (90th percentile), the LSAT (88th percentile), and others. This is your sign to share this post with your obnoxious lawyer friends.

It’s still not quite on par with human performance in many real-world scenarios, but it’s getting darn close, really fast. And this is happening today. Imagine the benchmarks and records that will be beaten in 12 months, in 3 years… within our lifetime.

Sam Altman, co-founder of OpenAI, said in this interview he wouldn’t be surprised if GPT-10 turned out to be a true AGI (we’re currently at GPT-4, and ChatGPT, built on GPT-3.5, was launched only a few months ago). These things can’t simply be extrapolated, but they do leave room for the imagination to fly a bit and think about the speed at which things could happen.

A side note on power… the notion of Sam Altman as Frodo Baggins from “The Lord of the Rings” keeps coming back to me. Not because Sam has a quest to destroy anything, but rather because he’s tasked with the responsibility of carrying one of the most powerful artifacts, one that could have significant implications for the world. As one of a handful of humans who get to decide the direction of AGI, Sam, just like Frodo, will face constant internal conflicts and temptations, struggle with corrupting influence, and need to show tremendous strength of character. OpenAI’s mission is to make sure AGI benefits all of humanity. Tough balancing act.

OpenAI’s charter and its other blog posts are worth a read.

Omniscient

Until recently, the closest thing to an oracle we had in the digital age was Google. In ancient times, an oracle was a person, often a priest or priestess, who served as a conduit for divine communication. By asking all sorts of questions, people would seek guidance from the oracle and they would obtain answers that were always believed to be true, as they were coming straight from the gods.

In 2006, both the Oxford English Dictionary and Merriam-Webster officially recognized “Google” as a verb. That’s when you know you’ve made it. You become a verb. What started as an innocent expression in 1998 in one of Larry Page’s (Google co-founder) product updates, two months before launch, ended up becoming a ubiquitous word we use on a daily basis. “Who was the 4th President of the US? Google it!” “I need a recipe for this Sunday’s dinner. Let me Google something.” “This company I’m doing research on seems sketchy. Have you Googled it yet?” Google became the world’s repository of information, and it had such a profound impact that it even affected our language.

Enter ChatGPT. One million users in five days, one BILLION monthly visitors in March, just 4 months after its release… Can we skip to the part where we start ChatGPT’ing things? A bit of a mouthful (my wife keeps asking if I checked with “ChadGPT”) so maybe it ends up being a different verb, but I believe we’ve reached a tipping point in terms of cultural significance with AI. With or without Oxford’s dictionary, ChatGPT has done in months what took Google almost a decade to achieve. It has permeated the cultural zeitgeist and there’s no turning back. People are using AI as personal trainers, pediatricians, psychologists, teachers, and even pastors or priests!

The process of ChatGPT’ing things actually resembles the oracle encounters more than Googling things. It’s natural. A back-and-forth conversation with the all-knowing entity on the other side of the screen.

Omnipresent

Can’t believe we’re more than halfway through this post on god and AI and I just thought of referencing Homo Deus. I read it back in 2016 and it served as an inspiration to move away from generalist financial investing to specializing in technology. The book is an essay on what could happen if we continue down the same path. Back then it scared me a bit, mostly because I realized that no matter how much I liked or disliked these potential future scenarios, I wouldn’t be able to control them or do much about them.

“The rise of AI heralds a new era of unprecedented potential. Machines are not only becoming more intelligent, but they are also capable of processing vast amounts of data at speeds and scales far beyond human capacity. This gives AI the ability to assist us in solving complex problems, making predictions, and enhancing our decision-making processes. From healthcare to finance, from governance to scientific research, AI holds the promise of revolutionizing various domains of human activity”

Re-reading some of the passages, some of the predictions feel eerily timely.

AI is already wreaking havoc on well-established tech companies in very different segments. Take ChatGPT for example. The Information reported there are at least 13 different software companies being affected by the chatbot’s quick adoption. Chegg, an online education company, mentioned during its last earnings call that ChatGPT is “having an impact on our new customer growth rate” as students embrace the new study buddy in town.

Others are embracing AI. If you can’t beat them, join them, right? Recently, Snapchat rolled out its own AI chatbot dubbed My AI. Running on the latest version of OpenAI’s GPT, and customized for Snapchat, My AI began as an experimental feature for some users but is now available to its entire user base. Now Snapchat is doubling down and starting to include sponsored links during the conversation with the bot. For example, if you ask the chatbot what to have for dinner tonight, it might give you the address of a specific sponsored restaurant.

Some projects are taking things even further. Auto-GPT is one of them. Think of Auto-GPT as AI bots that run by themselves and complete tasks for you. It uses GPT-4 to act autonomously. Yep, a bot that runs on its own, with minimal human intervention, and can self-prompt. Instead of you asking (prompting) ChatGPT for XYZ, then following up with additional prompts until you get the desired outcome, with Auto-GPT you state the end goal and that’s it. The AI will automatically construct its own follow-up questions until it reaches the desired outcome and the task is complete. Insane.
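For the curious, here is roughly what that self-prompting loop looks like as a toy Python sketch. To be clear, this is not Auto-GPT’s actual code: `run_agent`, `toy_model`, and the three-step plan are hypothetical names I made up for illustration, and `toy_model` is a canned stand-in where a real setup would call something like GPT-4. The only point is the shape of the loop: you state the goal once, and each answer gets fed back in as part of the next prompt until the model says it’s done.

```python
from typing import Callable, List


def run_agent(goal: str, model: Callable[[str], str], max_steps: int = 5) -> List[str]:
    """Self-prompting loop: each reply is folded into the next prompt."""
    history: List[str] = []
    prompt = f"Goal: {goal}. What is the first step?"
    for _ in range(max_steps):
        reply = model(prompt)
        history.append(reply)
        if reply.startswith("DONE"):
            # The model signals that the goal has been reached.
            break
        # No human in the loop: the agent writes its own follow-up prompt.
        prompt = f"Goal: {goal}. Done so far: {history}. What is the next step?"
    return history


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model: walks through a fixed plan."""
    plan = ["search flights", "book hotel", "DONE: trip planned"]
    # Count how many plan steps already appear in the prompt, then return the next one.
    completed = sum(step in prompt for step in plan)
    return plan[min(completed, len(plan) - 1)]


print(run_agent("plan a weekend trip", toy_model))
```

The `max_steps` cap is a deliberate choice in this sketch: a bot that prompts itself needs some limit so it cannot loop forever if it never decides it’s finished.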

The use cases are endless. Customer service (an agent can have fluent conversations with customers and cross-sell or up-sell them services, executing the transactions itself at the same time); social media (not only creating the content but also scheduling the posts and replying to messages); heck, you could even plan most of your next vacation, booking flights, tours, and hotels through your AI agent!

AI is a liquid concept. It could be touching many different things even if we don’t know it. When was the last time you were really “offline”? No phone, no smart TV, no Netflix, no Google Maps. The old adage that every company will be an Internet, mobile, or pick-your-favorite-paradigm-shift company will also hold for AI. Even if not all companies adopt it right away, their employees surely will, and they will push the innovation from within.

We’re already on track. AI will be everywhere at the same time. Omnipresent.

So what?

In my interview with Hallow’s CEO we discussed the role of tech in modern-day spirituality and the relationship between AI and religion. But I’m even more interested in the broader implications if we’re moving towards AGI: what sort of discussions should we be having as a society? At work? With friends?

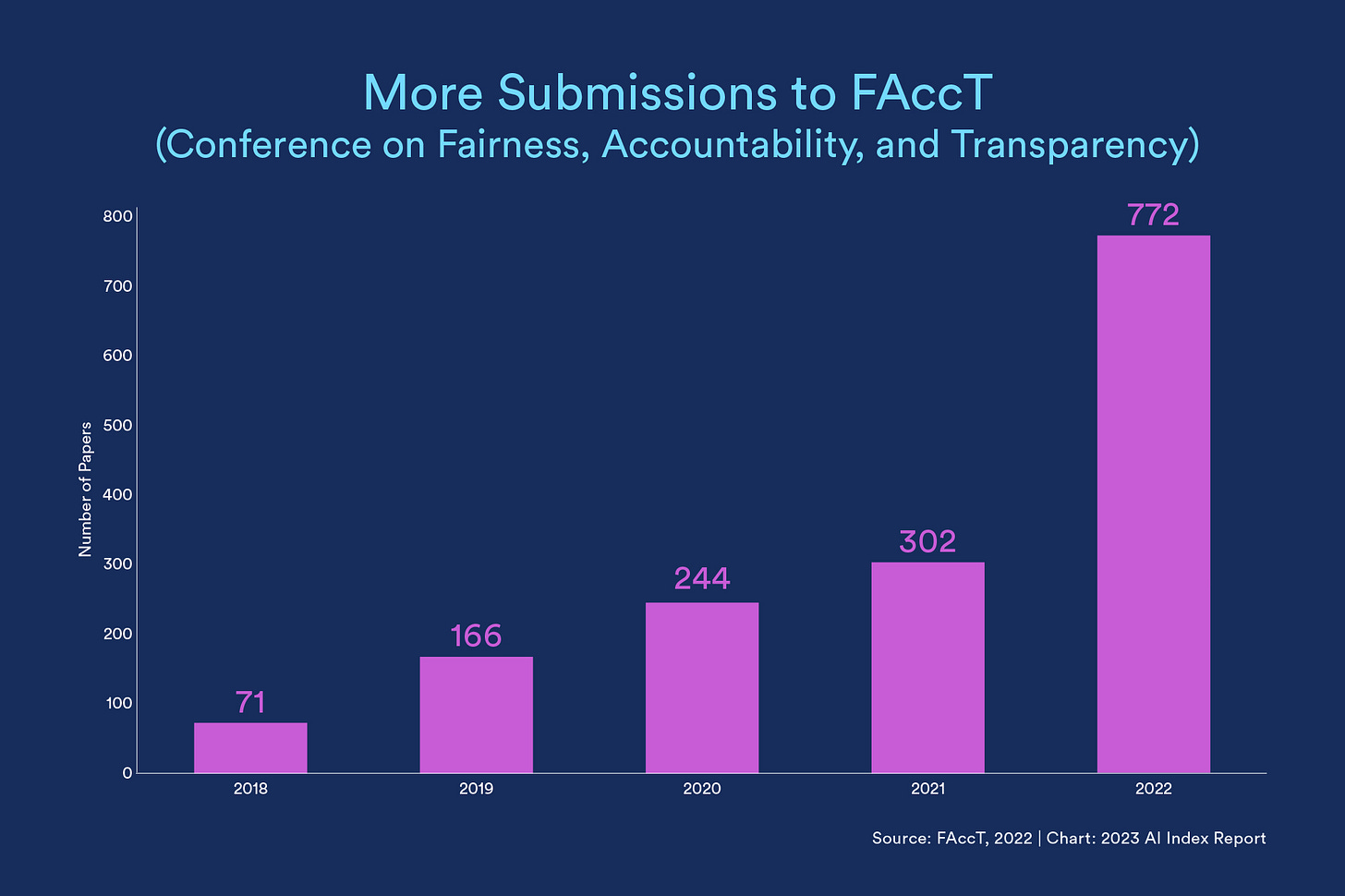

The rise in AI controversies has sparked greater attention towards AI ethics. The number of related papers doubled from 2021 to 2022 and has grown 10x since 2018.

Elon Musk and other AI leaders are calling for a 6-month pause on AI developments. Thousands have allegedly signed an open letter, citing “risks to society and humanity as shown by extensive research”. You can read the full letter here. Titled “Pause Giant AI Experiments”, the letter is littered with rhetorical questions like “Should we automate away all the jobs… Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?”. They are basically calling for a détente and AI controls. That’s not so different from the détente between the US and the Soviet Union during the Cold War in the 70s, which included increased discussions on arms control. Arms control aimed to prevent a catastrophic nuclear war with the potential to threaten humanity's existence. Ring a bell? I’m not sure how enforceable a “pause on AI” could be, but it raises the question: are we all thinking hard enough about these matters?

So far, OpenAI’s stance has been to expose the general public to the latest AI advancements as soon as possible. Even if they are not in their final form, OpenAI would rather have us experimenting with increasingly better versions of AI than getting punched in the face with one BIG announcement coming out of the blue.

“We expect powerful AI to make the rate of progress in the world much faster, and we think it’s better to adjust to this incrementally. A gradual transition gives people, policymakers, and institutions time to understand what’s happening, personally experience the benefits and downsides of these systems, adapt our economy, and to put regulation in place. It also allows for society and AI to co-evolve, and for people collectively to figure out what they want while the stakes are relatively low.”

I understand why Sam is doing this and I think it’s a very sensible and reasonable approach. It’s also a hedge and a way to share the responsibility with others, even if it means sharing the profits by giving away some of their trade secrets. The one important caveat to this soft landing approach is a potential ‘slippery slope effect’ coming into play. The idea behind this concept is that a seemingly minor or acceptable action, if allowed, can set off a chain reaction that ultimately leads to a MAJOR change vs the baseline.

Let me be more graphic. Imagine you have a pot of boiling water. If you were to drop a frog into it, the frog would immediately sense the danger and jump right out. It knows it's hot and wants to save itself.

But here's the interesting part: if you place the frog in a pot of cool water and slowly increase the heat, the frog won't realize what's happening. As the temperature gradually rises, the frog adjusts to each of the temperature changes. Before long, the water becomes scorching hot, but the frog doesn't notice. It's too late by the time it realizes it's in boiling water and tries to escape.

The story is scientifically inaccurate, but it works as a metaphor and a useful cautionary tale. If we just get used to the gradual yet steady release of ever more powerful AI systems without thinking about their potential consequences, we might end up bathing in boiling water like the frog in the example above.

The question of “can AI become a new form of god” is preposterous by design, and it calls for us to take an active role in the journey to AGI. We should understand what’s useful and highlight what makes us uncomfortable; we shouldn’t shy away from certain discussions just because they seem too futuristic, depressing, or morally challenging. Technology, and AI is no exception, has the potential to improve the human condition if used wisely. But we can’t let things merely happen to us; we should take responsibility and start having these conversations sooner rather than later.